Digital legal talks 2021: Abstracts & papers

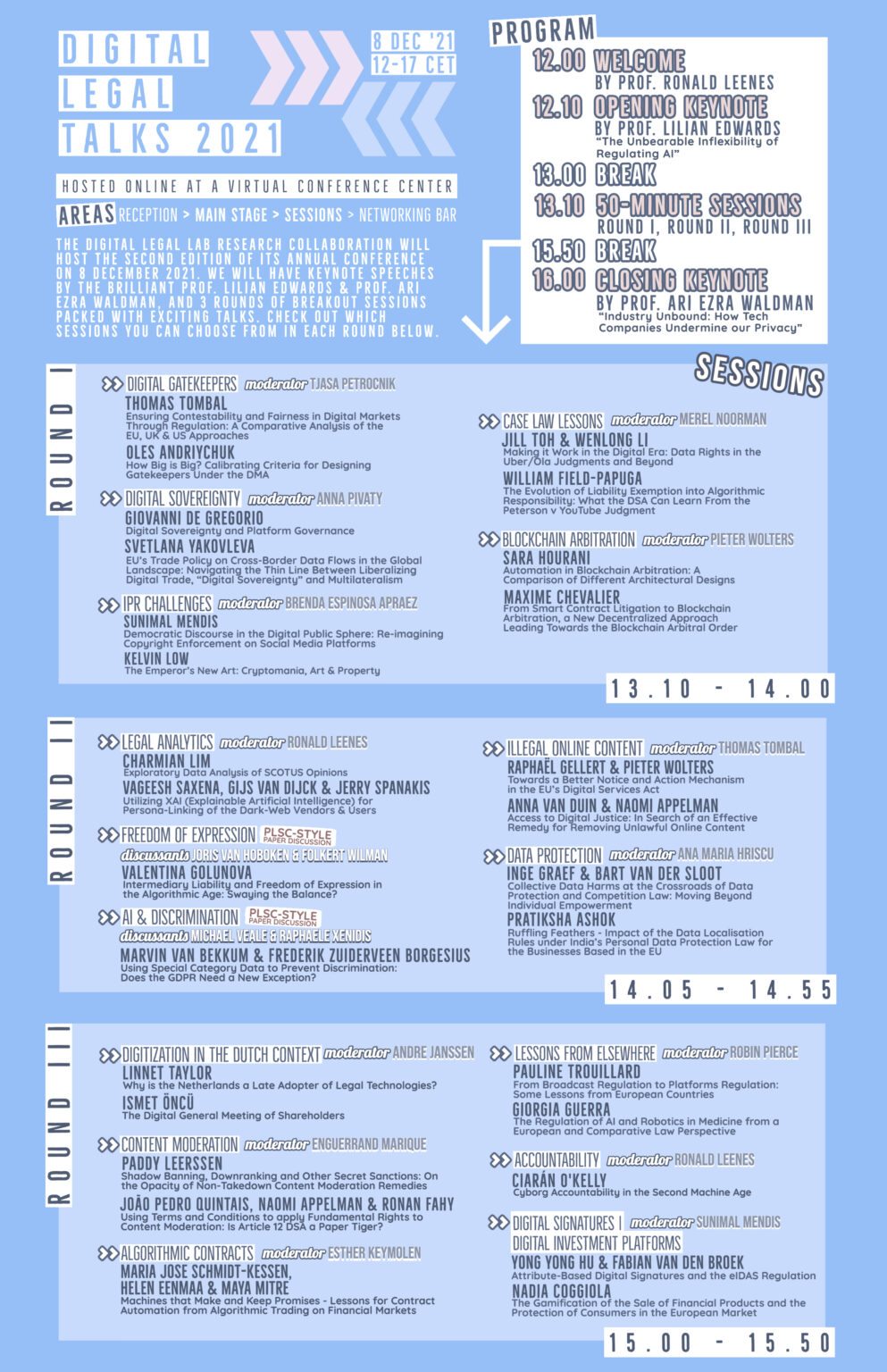

Digital Legal Talks 2021 will take place online on 8 December 2021. On this page, you can find an overview of the talks happening during three rounds of 50-minute breakout sessions.


Talks in Round I

Making it Work in the Digital Era: Data Rights in the Uber/Ola Judgments and Beyond

Jill Toh & Wenlong Li

Since its proliferation, the platform economy has been a testbed for workplace surveillance technologies. In the transport and food delivery sectors, platform companies practice widespread and continuous data collection and processing, while utilising algorithmic management systems for granular, managerial control of the workforce which operates at scale. Opaque proprietary algorithmic and automated decision-making systems are used to allocate work, calculate prices and wages, ensure compliance with a platform’s ever-changing terms and conditions, aggregate ratings and reviews, as well as (semi-) automatically deactivate and dismiss its workers. In Europe, the continuous and intensifying struggle by workers, trade unions, and labour activists for basic worker rights has manifested in the growing number of court cases related to employment status. In more recent times, these workers are gradually realising the potential importance and necessity of data rights. The Uber/Ola judgments from the Amsterdam District Court represent the first case(s) brought forward by workers exercising their data rights, in order to strengthen their negotiation positions in the overall struggle and resistance in the platform economy. This therefore represents the first opportunity to examine and contest the usefulness of data rights in employment and particularly platform-based work contexts. The paper will start by conceptually addressing the shift from worker rights to data protection rights (and the interactions between them) in the current political economy of data. It will proceed to critically evaluate the Uber/Ola judgments, identifying the misconceptions, controversies and progress made by the court. It will show that, although these are lower court decisions subject to appeal, the judgments showcase a wide range of practical problems facing data subjects and, in particular, a dearth of attention and guidelines on these common problems.
As such, through the lens of labour, the paper aims to evaluate the promises and pitfalls of data rights through this judgment, and other (legal) avenues, such as Article 88 GDPR and the proposed AI regulation. It will conclude with some recommendations related to improving clarity on data rights, and will take a broader, critical view of how existing (and proposed) technology regulation addresses power asymmetries in the context of labour and the platform economy.

The Evolution of Liability Exemption into Algorithmic Responsibility: What the Digital Services Act Can Learn From the Peterson v YouTube Judgment

William Field-Papuga

Since December 2020, there have been two major challenges to the liability exemptions under the eCommerce Directive. Firstly, the proposal for a Digital Services Act, published by the European Commission in December 2020, attempts to make the first fundamental reconsideration of the eCommerce Directive since 2000. Secondly, the Court of Justice of the European Union (“CJEU”) significantly changed the scope of the liability exemptions in its recent judgment Frank Peterson v YouTube; Elsevier v Cyando AG (“Peterson”) of June 2021.

Firstly, this paper aims to identify several significant controversies that emerged under the eCommerce Directive regarding the liability exemptions. This includes the absence of a so-called “Good Samaritan” clause and the development of a more complex knowledge-based exemption from liability standard. In addition, the encouragement of proactive general monitoring, as well as the controversial distinction between active vs neutral/passive platforms, have become key issues litigated in many disputes before the CJEU and the European Court of Human Rights (“ECtHR”).

Secondly, the article will demonstrate that the Peterson judgment fundamentally changes the previous understanding of the distinction between active vs neutral/passive platforms. It also alters what constitutes actual knowledge for the purposes of the liability exemption under Article 14 of the eCommerce Directive. In doing so, the judgment creates fewer opportunities for holding online platforms legally responsible for the content posted by their users. Simultaneously, the judgment establishes a concrete link between Article 3(1) of the Copyright Directive 2001/29 (“Copyright Directive” hereafter), with its positive requirement for platforms to refrain from communicating protected works to the public without the authorization of their author, and Article 14 of the eCommerce Directive, with its liability exemption. The judgment implies that the articles should be interpreted consistently, and thereby creates a clearer path for holding platforms liable for their decisions in structuring their services. This signifies a judicial shift away from regarding liability exemption as the main vehicle in intermediary liability, and towards focusing on algorithmic responsibility. This article argues that the requirements under Article 3(1) of the Copyright Directive, which is currently lex specialis, applying specifically to intellectual property infringements, could become lex generalis given its general relevance to other forms of illegal content.

Thirdly, it contends that the Digital Services Act makes solid progress toward developing greater positive obligations and a more detailed duty of care for online platforms. However, it repeats many of the controversial elements in the eCommerce Directive. For example, it does not adopt a differentiated notice-and-action procedure for different types of content. It also largely repeats verbatim the active vs neutral/passive requirement, which was (and still is) the subject of significant dispute under the eCommerce Directive. Furthermore, it creates mixed messaging regarding voluntary content moderation by platforms. It could therefore benefit from adopting the principles reflected in the Peterson ruling.

How Big is Big? Calibrating Criteria for Designating Gatekeepers Under the DMA

Oles Andriychuk

The DMA is designed to be applied only to a very narrow group of undertakings with a strategic position in digital markets: gatekeepers. A perfectly correct calibration of gatekeepers implies a situation where all undertakings that have an entrenched strategic position in a specific market are captured by the definition. At the same time, the definition should not extend to the ‘second tier’ of the biggest market players. This category comprises the undertakings that are the most plausible new entrants in the gatekeepers’ markets.

Capturing those who should not be captured would not only harm these undertakings. It would also substantially harm inter-platform competition in these markets, inasmuch as it would deny potential competitors a meaningful possibility to challenge the gatekeepers. Defining the quantitative and qualitative thresholds too narrowly would allow some of the de facto gatekeepers not to be covered by the DMA. Defining them too widely would designate as gatekeepers those that are in fact the main real and potential challengers of the status quo.

Defining the scope of the addressees of the rules is an endeavour requiring surgical precision. In this talk I aim to outline and compare different options discussed by the stakeholders engaged in the legislative process on the Digital Markets Act.

Addressing dependence to gatekeepers: a comparative analysis of the EU, UK and US approaches

Thomas Tombal

In a society where individuals increasingly spend time on the internet and where businesses mobilise this resource to increase the reach of their products and services, large online platforms have become, for many, unavoidable actors. As the power of these actors keeps growing, it is increasingly argued that competition policy alone cannot address all the systemic problems that they create, and that in digital markets where quick reactions are indispensable, ex ante legislation must be adopted to prevent such systemic problems from emerging in the first place. In this regard, there seems to be a consensus across the globe that legislative action must especially be taken against a specific sub-set of large online platforms. This is due to the fact that they increasingly act as gateways or gatekeepers between business users and end users, and that this leads to significant dependencies of many (business) users on these gatekeepers. In light of the above, this contribution will aim at answering the following research question: “How do the EU, UK and US legislators intend to address the growing issue of dependence on gatekeepers?”. To do so, Section 2 will attempt to clarify the scope of the digital platforms that would be subject to these regulatory initiatives, and will outline that there are some discrepancies in these various approaches. Then, Section 3 will outline the general approach that is taken in each of these jurisdictions to address this dependence issue, and will highlight the variations in the selected options. In this regard, reference to the provisions imposing data sharing will be made for illustration purposes. Finally, Section 4 will conclude by summarising the main discrepancies between these different approaches.

Automation in Blockchain Arbitration: A Comparison of Different Architectural Designs

Sara Hourani

Blockchain technology was first introduced at the end of the 2000s when an anonymous person using the name of Satoshi Nakamoto released their whitepaper on the Bitcoin cryptocurrency. The specificity of this technology is that it functions on a decentralised basis as there is no use of a trusted intermediary or central authority. Blockchain technology is used in smart contracts to automate transactions. Smart contracts are software codes that include the terms and conditions of a contract and that run on a network, leading to a partially or fully automated self-execution and self-enforcement of the contract. In essence, a smart contract is a software programme that is stored on the blockchain. Smart contracts can be used in supply chain management, trade finance and insurance, for example.

In the context of these different transactions, it would be relevant to include a dispute resolution clause in the smart contract to prompt the parties to resolve their differences with regard to the performance of the contract. This type of dispute resolution has received widespread attention for the resolution of specific low-value claims, especially in the context of cryptocurrency and commercial smart contract-related disputes.

Different platforms have integrated automation in the blockchain-based dispute resolution procedure and have adopted different designs for automation in the process. To illustrate, the Kleros blockchain-based dispute resolution procedure has adopted automation at every stage of the procedure. Another example is the CodeLegit blockchain-based arbitration procedure that clarifies at what stages of the procedure automation can be used.

Research Question:

This paper’s research question therefore queries the extent to which automation is incorporated in the design of current blockchain-based/smart contract dispute resolution systems, and how automation can be embraced in these procedures to comply with fairness and equity standards that are currently found in traditional private dispute resolution procedures, such as arbitration. The paper additionally examines the extent to which it is necessary to adopt automation in dispute resolution.

Plan Outline:

Part one of the paper focuses on a comparative analysis of the use of automation in the blockchain dispute resolution platforms chosen for this study. Part two carries out an analysis of the compatibility of such automation with the law on arbitration in different legal systems, and assesses the fairness of the automated aspects of the procedure in the context of these legal systems.

From Smart Contract Litigation to Blockchain Arbitration, a New Decentralized Approach Leading Towards the Blockchain Arbitral Order

Maxime Chevalier

Blockchain dispute resolution has led the crypto economy to the surge of a new form of dispute resolution: blockchain arbitration. Resolving disputes on-chain is becoming necessary as national and international legal frameworks are not adapted to the characteristics of blockchain transactions. More importantly, the legality of smart contracts is highly debated under various national laws. This new sui generis form of arbitration should not be assimilated with traditional arbitration. Indeed, blockchain arbitration might not fit within the traditional international arbitration framework, and it does not have to. Because blockchain arbitration operates as an oracle, the decision from the jurors automatically triggers or modifies the smart contract. Through the blockchain technology, arbitration has reached the possibility to automatically enforce arbitral awards with no need to rely on state courts’ authority. Observing the legal theories of international arbitration, blockchain arbitration enshrines the representation of delocalized arbitration, but simultaneously leaves room for a new representation of arbitration: decentralized arbitration. In the next decades, we will see the surge of the blockchain arbitral order, an independent legal order anchored in the Lex Cryptographia. This essay advocates for the recognition of the blockchain arbitral legal order and tries to draw its contours.

EU’s trade policy on cross-border data flows in the global landscape: navigating the thin line between liberalizing digital trade, “digital sovereignty” and multilateralism

Svetlana Yakovleva

This contribution makes three main points. First, it contends that although the policy concerns behind the EU, US and China’s restrictions on data flows are different, the degree of regulatory autonomy these jurisdictions are willing to give up in order to promote cross-border data flows is more similar than is often assumed. To make this argument, the article compares the EU, US and China’s approaches to governing cross-border data flows in broader domestic legal frameworks beyond the rules on commercial privacy. Second, this article argues that the diffusion of the US model of governing cross-border data flows around the globe (including the UK), the recent trade law obligations on data flows the EU has undertaken vis-à-vis the UK and the upcoming reform of the UK data protection law may lead to the eclipse of the so-called “Brussels Effect” in data protection. Third, and finally, this article reflects on what trading partners’ increased resort to national security-type exceptions, as a counterbalance to obligations on cross-border data flows, implies for the future of the multilateral trading system. It observes that, although the substantive international trade commitments on data flows are deepening, trading parties preserve their offramps from these deeper obligations in order to protect their domestic policy priorities and advance their geopolitical goals.

Digital Sovereignty and Platform Governance

Giovanni de Gregorio

The notion of sovereignty is one of the constitutive pillars of states and the exercise of public powers on a certain territory. And, even in the digital age, states are increasingly building their narratives of digital sovereignty. In this case, platform governance is playing a paradigmatic role in influencing strategies to govern the digital environment on a global scale.

On the western side of the Atlantic, the First Amendment still provides a shield against any public interference, leading US companies to extend their powers and standards of protection beyond US territory. Despite some attempts to deal with platform power at the federal level (e.g. PACT Act), and even at the state level (e.g. Florida), such a liberal approach not only fosters private ordering but also hides an indirect and omissive way to extend constitutional values beyond territorial boundaries. Rather than intervening in the market, the US has not changed its role while observing its rise as a liberal hub of global tech giants. Regulating online platforms in the US could affect the smooth development of the leading tech companies in the world while also increasing the transparency of the cooperation between the government and online platforms in certain sectors like security, thus unveiling an invisible handshake. The Snowden revelations have already underlined how far public authorities rely on Internet companies to extend their surveillance programmes and escape accountability. Put another way, US digital sovereignty would benefit from private ordering and the invisible cooperation between public and private actors.

By contrast, China has always shielded its market from external interference rather than adopting a liberal approach or exporting values through international economic law. China is promoting a model resembling the western conception of the Internet while maintaining control over its businesses. Baidu, Alibaba and Tencent, also known as BAT, are increasingly competing with the dominant power of Google, Apple, Facebook and Amazon, or GAFA. The international success of TikTok is an example of how China aims to attract a global audience of users while supporting its business sector. Besides, the adoption of the Digital Silk Road increasingly makes China a relevant player beyond territorial boundaries. The Huawei model is based on exporting technological power by supplying digital infrastructure even in peripheral areas. Put another way, China is only partially opening to digital globalisation while maintaining control over the network architecture. This twofold approach is called the Beijing effect. The cases of China and Russia show how these countries propose alternatives for governing digital technologies which tend to reflect their values. Such influence has not only been domestic but also international. For instance, China has already tried to dismantle the western multi-stakeholder model by proposing to move internet governance within the framework of the International Telecommunication Union.

Within this framework, the Union has already shown its ability to influence global dynamics, so much so that scholars have named this the ‘Brussels effect’. It should not be surprising that the Union has also started to build its narrative about digital sovereignty based on ensuring the integrity and resilience of our data infrastructure, networks and communications, aimed at mitigating dependency on other parts of the globe for the most crucial technologies. At the same time, the Union has become a global regulator of online platforms. Since the first period of regulatory convergence based on neo-liberal positions at the end of the last century, the U.S. and the Union have taken different paths. On the eastern side of the Atlantic, the Union has slowly abandoned its economic imprinting. While, at the end of the last century, the Union primarily focused on promoting the growth of the internal market, this approach has been complemented (or even overturned) by a constitutional democratic strategy characterising European digital constitutionalism. The adoption of the General Data Protection Regulation has been a milestone in constitutionalizing European data protection after the Lisbon Treaty. Likewise, the Digital Services Act is another paradigmatic example showing the shift of paradigm in the Union towards more accountability of online platforms to protect European democratic values. These two examples show the intention of the Union to propose a global model characterised by a sustainable democratic strategy limiting platform powers. In particular, the European framework of content and data protection is finding its path on a global scale, while emerging as a model for other legislation in the world. Furthermore, the adoption of the GDPR has led a growing number of companies to voluntarily comply with some of the rights and safeguards even for data subjects outside the territory of the Union.
The intention to overcome territorial formalities also drove the ECJ in Google Spain and in the Schrems saga, respectively by defining a right to be forgotten online and by invalidating the Commission’s adequacy decisions to ensure the effective protection of the fundamental rights to privacy and data protection as enshrined in the European Charter. Nonetheless, the ECJ has clearly defined the limit to the extension of European rules in Google v. CNIL and Glawischnig-Piesczek v Facebook.

This research aims to study the relationship between digital sovereignty and platform governance with a specific focus on the European Union, which is increasingly proposing a third way of digital sovereignty driven by democratic constitutional values. By looking in particular at the European strategy for platform governance from a global perspective, this research contributes to defining how far platform governance influences digital sovereignty. The main findings of this paper would contribute to defining the values characterising European policy and influencing the European model of digital sovereignty.

Democratic discourse in the digital public sphere: Re-imagining copyright enforcement on online social media platforms

Sunimal Mendis

Online social media platforms constitute a critical component of the contemporary digital public sphere. As the entities who govern these digital spaces and the content shared on them, online content-sharing service providers (OCSSPs) have the capacity to direct and influence the discourse taking place within their platforms and the ability to act as de facto arbiters of communicative freedom in the digital public sphere.

In the EU, Article 17 of the Copyright in the Digital Single Market Directive (CDSM) of 2019 has come under fire for imposing a heightened degree of liability on OCSSPs for copyright infringing content shared by users over their platforms. Concerns have been raised that Article 17 compels OCSSPs to adopt a more stringent approach towards preventing the sharing of unauthorized content (potentially with the aid of algorithmic content moderation tools), thereby increasing the risk of collateral censorship and seriously undermining users’ ability to engage with creative and cultural content in ways that promote robust democratic discourse.

Using Article 17 of the CDSM as an illustrative case, this essay argues that the current EU legal framework on copyright enforcement on online social media platforms is deeply entrenched in the utilitarian viewpoint of copyright law. Consequently, it accords primacy to protecting the economic interests of copyright owners (by ensuring their ability to obtain a reasonable return on their creative and/or entrepreneurial investments) and renders the protection of user freedoms (such as their ability to benefit from legally granted exceptions and limitations to copyright) peripheral to this core economic aim.

By extension, the role of online content-sharing service providers (OCSSPs) is limited to ensuring that copyright owners can obtain fair remuneration for content shared over their platforms (role of ‘content distributors’) and preventing unauthorized uses of copyright-protected content (‘Internet police’). No duties are imposed on them for protecting user freedoms to engage in robust democratic discourse.

This essay proposes that the social planning theory of copyright law – which affirms the communicative function of copyright and its role as a tool for promoting democratic discourse – provides a conceptual basis, firstly, for (re)affirming the protection of user freedoms as being endogenous – and in fact fundamental – to copyright’s purpose and, secondly, for re-framing the role of OCSSPs as facilitators and promoters of democratic duties, thereby allowing the imposition of positive enforceable duties on OCSSPs to ensure that user freedoms are preserved and protected on their platforms.

The Emperor’s New Art: Cryptomania, Art & Property

Kelvin Low

The latest wave of cryptomania has brought us yet another acronym after ICOs (initial coin offerings) – NFTs (non-fungible tokens). Touted as a means to render readily replicable digital art (and possibly other objects) rare and scarce, NFT-mania reached its apogee when the artist Beeple sold a collage titled Everydays: the First 5,000 Days through Christie’s on 11 March 2021 for US$69m, making it the third most expensive piece of art sold by a living artist. But did the buyer actually acquire, through the NFT, any art? What is art abstracted from the medium upon which it is embedded and dissociated from its copyright? Can such a dissociated abstraction be meaningfully owned? What does the concept of fungibility entail and can a token be permanently conferred fungibility or non-fungibility at the point of its mining or minting? Once the technical process of minting an NFT is properly understood, it will be seen that NFTs are not what they seem. NFTs may be property, but they are not property in art.

Talks in Round II

Access to Digital Justice: In Search of an Effective Remedy for Removing Unlawful Online Content

Anna van Duin & Naomi Appelman

The publication and dissemination of unlawful online content and the lack of effective legal remedies have proven to be a persistent problem. This paper aims to shed light on the problem by combining the perspectives of online speech regulation and access to justice, explaining why a solution is so difficult to find. It is based on an empirical study for the Dutch government into procedural routes and obstacles to quickly take down content that causes personal harm – i.e., a wide variety of Article 8 ECHR claims that impact people’s private life. The study consisted of a representative survey of the Dutch population, expert interviews and a legal analysis. In this paper, the issues identified in the Dutch procedural context are analysed in light of the broader EU legal framework and policy debates. As such, this paper ties in with the ongoing debate on the Digital Services Act (DSA) and its eventual implementation and enforcement, which is meant to protect European citizens when they use digital services and platforms in particular.

Our study shows the heterogeneity of the problems people are confronted with when dealing with a broad range of harmful online behaviours. However, current EU regulation as well as the proposed DSA take a blanket approach when it comes to harmful content. Insufficient attention is paid to the divergent perspectives of people who are directly and personally affected by such content and how they relate to various options to contest it. Even though a significant minority of the Dutch population (15%) has personal experience with harmful content, the available means of recourse – both judicial and out-of-court – are often not utilized. Specific obstacles concern, inter alia, the specialised nature of the field and the difficulty for injured parties to find the appropriate actor to address.

The open and decentralised structure of the internet, as well as the powerful position of digital platforms, make it particularly complex to regulate and control content moderation in general. As discussed in this paper, the main challenges for ‘access to digital justice’ are the need for speed and scalability. A trade-off emerges between accessibility on the one hand and the existence of institutional and procedural safeguards for the protection of fundamental rights on the other, especially the freedom of expression and due process. There is no single procedure that can properly balance all the divergent rights and interests concerned. Instead, a problem-oriented approach is proposed, where the contestation of harmful online content is seen as a collection of interconnected issues that require a tailored solution. This paper provides a steppingstone for more empirical research into people’s needs and perceptions in this respect.

This paper – to be published shortly as a chapter in an edited volume on ‘Frontiers in Civil Justice’ – is the first prong of an interdisciplinary research project on content moderation and access to justice in the context of the DSA. The project combines legal, social and behavioural perspectives to collect data on people’s exposure to harmful content on websites and social media as well as the obstacles they face when it comes to the removal of such content. The project seeks to understand the individual and systemic factors at play, to critically analyse the role and responsibilities of platforms and to make policy recommendations about the design of effective responses and remedies.

Towards a better notice and action mechanism in the EU’s Digital Services Act

Raphaël Gellert & Pieter Wolters

The goal of this contribution is to discuss the notice and action mechanism enshrined in the European Commission’s 2020 proposal for a Digital Services Act (DSA).

Since the adoption of the e-Commerce Directive, notice and action mechanisms have become an increasingly important tool for the limitation of illegal content online. They allow for the notification and subsequent removal or disabling of access to the content at stake. Even though such mechanisms are not fully regulated at EU level, they have been used by hosting services and have been enshrined in some member states’ legislation as well as in some EU sectoral legislation. If adopted, the DSA will address this gap as its Article 14 formally codifies, harmonises, and builds upon existing practices and rules. Such a mechanism would then apply to all hosting services (barring exceptions for micro and small enterprises) and to all types of content. Beyond the mechanism itself, the proposed DSA also provides for additional rules and safeguards such as a statement of reasons (Article 15), an internal complaint-handling mechanism (Article 17) and out-of-court dispute settlement (Article 18). For the purpose of this contribution these safeguards are considered an inherent part of the notice and action mechanism.

The key question this contribution addresses is whether the proposed notice and action mechanism achieves an adequate balance of the various interests at stake (i.e., content providers, notifiers, users of online services, hosting services), including adequate safeguards for the protection of fundamental rights (in particular freedom of expression), judicial oversight and access to justice through effective redress mechanisms. We propose several improvements to the current proposal, including a better facilitation of anonymous notices, stronger redress mechanisms for notifiers, the extension of the internal complaint mechanism to all hosting service providers and a stronger emphasis on the role and accessibility of the courts.

Ruffling Feathers – Impact of the Data Localisation Rules under India’s Personal Data Protection Law for the Businesses Based in the European Union

Pratiksha Ashok

From the 1960s to the 2021 EU-India Leaders’ Meeting, the focus of EU-India relations has been on strengthening strategic partnerships between the ‘Unions of Diversity’. At the 2020 India-EU Summit, one of the agreed agenda items was to enhance convergence between the regulatory frameworks so as to ensure a high level of personal data and privacy protection and to facilitate safe and secure cross-border data flows. Both Unions have taken steps to ensure data protection for their citizens.

The General Data Protection Regulation, 2016 (GDPR) stands as a torchbearer in data protection legislation. In EU law, GDPR is the Regulation on data protection and privacy and the transfer of personal data providing individuals control of their data and streamlining business use of data.

In India, the Supreme Court, in 2017, delivered one of its landmark decisions, the Puttaswamy Judgement. One of the questions considered was whether the right to privacy was a fundamental right. The Court decided that privacy is rooted in human dignity enumerated in Article 12 of the UDHR and Article 17 of the ICCPR and is a fundamental right. In light of this decision, in 2018, the Srikrishna Committee was set up to provide its Report to create the Personal Data Protection Bill, 2019 (PDPB).

When the GDPR was introduced, businesses across the globe woke to a new standard of data protection. Anu Bradford coined the term ‘Brussels Effect’ to describe the expansive application of EU standards worldwide. Indian IT Companies such as Infosys and Wipro, which adopted GDPR norms in their business conduct, illustrate this phenomenon. This article looks in the other direction and attempts to ascertain the potential impact of the PDPB on European businesses. It goes one step ahead of existing research on comprehending the differences, focusing on the noteworthy conceptual difference of data localisation and its potential impact on European businesses.

Data sovereignty is the idea that data is subject to the laws of the nation in which it is collected. Data localisation builds on data sovereignty and requires collection, processing and storage to first occur in the nation where the data was generated. India is not the first country to introduce data localisation requirements. Under the PDPB, critical personal data must be processed in India, and sensitive personal data must be stored in India. However, they can be transferred outside India under specific conditions.

The documents préparatoires for the Srikrishna Committee Report recognised the need to treat different types of personal data differently. In an attempt to balance the rights of its citizens, privacy, and prevention of foreign surveillance, the Srikrishna Committee Report recommended that critical personal data be processed in India and sensitive personal data be stored in India. By contrast, under the GDPR, data localisation is not required unless international data transfer requirements are not met. European businesses needing to adapt to PDPB provisions should be aware of the Draft National E-Commerce Policy, which advocated for data localisation. This Policy would be the equivalent of the proposed Digital Services Act. The Policy focuses on the role of data in India’s growth and the future. The Policy also refers to data sovereignty and the concept of data in the e-commerce sector, which may affect European businesses. European businesses must consider the impact of the data localisation requirements, both potentially positive and negative. Businesses must consider the costs of such requirements and the potential impact on innovation and their current and future investments in India. Any compliance requirement that affects businesses could also affect the economy, including trade and economic growth. Businesses would have to find the right balance between the cross-border flow of data and access to data. Businesses could also potentially benefit from the data localisation requirement with the ease of access to data and the support of local law enforcement.

This research is only the first step towards understanding the scope of data localisation and the requirements that countries place on businesses. Countries are balancing sovereignty and the free flow of data, and businesses are now placed in a situation where they must strike a similar balance between the free flow of data and such compliance requirements.

Collective data harms at the crossroads of data protection and competition law: moving beyond individual empowerment

Inge Graef & Bart van der Sloot

In an era of big data, harms caused by data technologies can no longer be effectively addressed under the predominant regulatory paradigm of individual empowerment. Even a sophisticated consumer cannot fully protect herself against collective harms triggered by others’ privacy choices or by technologies creating competitive harm without processing personal data or targeting individuals. While data protection and competition law can be applied more proactively to address such harms, difficulties are likely to remain. We therefore submit that stronger regulatory interventions are required to target collective, and sometimes competitive, harm from technologies like pervasive advertising, facial recognition, deepfakes and spyproducts.

Exploratory Data Analysis of SCOTUS Opinions

Charmian Lim

Exploratory data analysis is one of the first and most essential steps in any data experimentation project. Performing exploratory data analysis to map out the main characteristics of a dataset helps with formulating hypotheses, provides guidance on which tools and models to choose, and identifies possible problem areas. This presentation is a report on exploratory data analysis on the SCOTUS Opinions dataset from Kaggle.com, performed with Natural Language Processing (NLP) techniques applied with the Python programming language. First, I report basic information about the dataset, such as size, dimensions, and features. Next is an analysis of text statistics, including word frequency, sentence length, and opinion length. Then, n-grams – contiguous sequences of n-number of words – are also analyzed. Sentiment analysis is also performed to determine if the opinion texts are positive, negative, or neutral. Further, the SCOTUS opinions are scored for readability. Finally, problems in data quality are identified. This exploratory data analysis reveals challenges arising from the data and possible avenues for further comprehensive research on the SCOTUS data.
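To make the text-statistics and n-gram steps described above concrete, here is a minimal sketch using only the Python standard library. It is not the presenter's code: the sample sentence, the `tokenize` and `ngrams` helpers, and the simple regular-expression tokenizer are assumptions chosen for illustration, and a real analysis of the Kaggle dataset would likely use a dedicated NLP library.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens (hypothetical helper)."""
    return re.findall(r"[a-z']+", text.lower())


def ngrams(tokens, n):
    """Return all contiguous sequences of n tokens as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


# Illustrative text only; it is not taken from the SCOTUS Opinions dataset.
sample = (
    "The judgment of the court is affirmed. "
    "The judgment of the court below is reversed."
)

tokens = tokenize(sample)
word_freq = Counter(tokens)               # word frequency
bigram_freq = Counter(ngrams(tokens, 2))  # frequency of 2-grams

print(word_freq.most_common(3))
print(bigram_freq.most_common(3))
```

The same `ngrams` helper works for any n, so trigram counts only require changing the second argument.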

UTILIZING XAI (Explainable Artificial Intelligence) FOR PERSONA-LINKING OF THE DARK-WEB VENDORS & USERS

Vageesh Saxena, Gijs Van Dijck & Jerry Spanakis

Our research emphasizes an XAI (Explainable Artificial Intelligence)-based baseline that can link, connect, and identify vendors on the Dark Web (Alphabay, Dreams, and Silk Road-1) markets by analyzing the writing styles in their advertisements. We train our model to identify vendors that 1) migrate their business across multiple markets and 2) create aliases within the markets to stay hidden from law enforcement. Without being explicitly trained on the unseen data from two of our Dark Web markets, our model shows a promising weighted F1 score of 0.7957 for over 5,836 vendors. Furthermore, we argue that since we trained our model in an open-set classification setting, such a model can be deployed in real-world scenarios to link and even match vendors against an existing criminal database. Finally, our framework looks into model explanations, providing linguistic and stylometric insights to help LEA investigations.
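For readers unfamiliar with the metric, the sketch below shows how a weighted F1 score is commonly computed: the per-class F1 scores are averaged, each weighted by the number of true instances of that class. This is an illustrative sketch, not the authors' evaluation code; the `weighted_f1` function and the toy vendor labels are hypothetical.

```python
from collections import Counter


def weighted_f1(y_true, y_pred):
    """Average of per-class F1 scores, weighted by each class's support."""
    support = Counter(y_true)
    total = len(y_true)
    score = 0.0
    for label, count in support.items():
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
        denom = 2 * tp + fp + fn
        f1 = 2 * tp / denom if denom else 0.0
        score += (count / total) * f1
    return score


# Toy labels (hypothetical vendors), not data from the study.
y_true = ["vendor_a", "vendor_a", "vendor_a", "vendor_b"]
y_pred = ["vendor_a", "vendor_a", "vendor_b", "vendor_b"]

print(round(weighted_f1(y_true, y_pred), 4))
```

Weighting by support means that vendors with many advertisements influence the score more than vendors with few, which matters when class sizes are highly unbalanced.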

Intermediary liability and freedom of expression in the algorithmic age: swaying the balance?

Valentina Golunova

This is a PLSC-style paper discussion. Please read the paper (provided below) prior to participating in this session.

The “safe harbour” regime enshrined in the e-Commerce Directive is currently at a crossroads. As modern hosting providers have developed technical capabilities to tackle illegal user-generated content in a swift and efficient manner, both policymakers and judges are struggling to ascertain whether these providers should still be exempted from liability for transmitting or storing such content. This paper argues that the sweeping development of algorithmic content moderation prompts the constriction of the “safe harbour” enjoyed by providers and could ultimately hamper freedom of expression on the Internet. By proactively removing content with the help of algorithmic tools, providers might become ineligible for the liability exemption as their activities could be seen as active rather than merely technical or automatic. Furthermore, providers that have deployed algorithms to weed out third-party infringements might be subject to more strenuous tests of knowledge and expeditious action. The advent of algorithmic content moderation is also gradually diluting the prohibition of general monitoring obligations as it leads courts to assign large-scale inspection duties to providers. It is contended that the ongoing corruption of liability protections might induce providers to err on the side of caution and engage in the disproportionate removal of legitimate content. In view of this pressing issue, this paper calls for legislative action to reaffirm the boundaries of the “safe harbour” and safeguard freedom of expression online.

Using special category data to prevent discrimination: does the GDPR need a new exception?

Marvin van Bekkum & Frederik Zuiderveen Borgesius

This is a PLSC-style paper discussion. Please read the paper (provided below) prior to participating in this session.

Organizations may use artificial intelligence to make decisions about people for a variety of reasons, for instance, to select the best candidates from many job applications. However, AI systems can have discriminatory effects when used for decision-making. AI systems could reject applications of people with a certain ethnicity, while the organization did not plan such ethnicity discrimination. But in Europe, an organization runs into a problem when it wants to assess whether its AI system accidentally leads to ethnicity discrimination: the organization does not know the applicants’ ethnicity. In principle, the GDPR bans the use of certain ‘special categories of data’ (sometimes called ‘sensitive data’), which include data on ethnicity, religion, and sexual preference. This paper asks: What are the arguments for and against a law that creates an exception to the GDPR’s ban on collecting special categories of personal data, to enable auditing AI for discrimination? We analyse whether the GDPR indeed hinders the prevention of discrimination, and we map out the arguments in favour of and against introducing such a new exception to the GDPR. The paper discusses European law, but the paper can be relevant outside Europe too, as many policymakers in the world grapple with the tension between privacy and non-discrimination policy.

Cyborg Accountability in the Second Machine Age

Ciarán O’Kelly

AI is not simply an exogenous shock that is to be managed within the parameters of public accountability as we understand it now. Rather, AI is continuously further embedded as an endogenous feature of administrative work. This means that the measures and standards we apply to public management of societal challenges are already infused with algorithmic processes. This will not simply have an impact on the kinds of information people use, but on the institutional and social logics through which they imagine their roles.

Answering the question of what accountability means when there are ‘robots on the team’ will require our getting to grips with what it is to be accountable in the first place. I argue that informal accountability, which acts as the locus for understanding what more formal accountability mechanisms entail, is the central facet of all accountability, even of the kinds of mechanisms and structures that more formal accountability institutions articulate.

I take a relatively ‘thick’ line on informal accountability relationships here, describing them as a class of ‘plural subjectivity’. Such relationships, I think, are always rooted in collective intentions and understandings because intentions and understandings always emerge in group contexts. Accountability is sited in group agency. It is underpinned and informed by the emergence of joint commitments and intents between actors (following Gilbert, 1989) in the context of how they understand and thus navigate their terms of work. Formal accountability mechanisms are formed from joint commitments and understandings in themselves. They are team efforts.

This thick perspective on informal accountability gives us a route into how AI is and will become endogenous in accountability relationships and from there in governance more broadly. Accountability being a ‘we’ phenomenon gives us a better sense of how algorithmic processes, each possessing a degree of agency, can be ‘part of the team.’

Talks in Round III

From Broadcast Regulation to Platforms Regulation: some Lessons from European Countries

Pauline Trouillard

Numerous legal scholars and sociologists have pointed out the ongoing process of propertization and datafication of the informational economy, and the troubling control of the information society by private actors, whose goal is to make money, and not to cure the public sphere. The process of search, content aggregation and social networking on the so-called ‘platforms’ produces an intermediated experience for users, in which private actors have infinite power. Most scholars have highlighted this process as new and exceptional; the goal of this article is, on the contrary, to establish a comparison with the old school of speech regulation, in particular with television. As the TV market is highly concentrated in the hands of specific individuals and corporations, these market actors can use their power to broadcast the political messages that best support their interests and decide to invite only people they politically agree with. In order to increase their profits, they can also sacrifice the quality of public debate on the altar of sensationalism and entertainment. While an efficient regulation system for the platform economy is slow to emerge, this comparison can prove helpful in formulating some normative recommendations for platform regulation, building on the television regulatory framework in Europe and in the US.

In the first part of this article, I draw the comparison between the broadcasting business model and the platform business model and explain why this comparison is appropriate. One aspect of the similarities between the two is the so-called attention tragedy: companies seeking profits are invading spaces previously walled off from commercial exploitation. The business model of mass-media companies and platforms is based on selling time, space and audience to advertisers, and this comes at the cost of the quality of the content provided to this audience.

Another common feature of platforms and the mass-media sector is concentration, although it does not stem from the same causes: concentration in the mass-media sector used to result from the scarcity of resources, combined with the costs of both production and distribution, while concentration in the platform sector mainly results from the ‘platform effect’ that comes from the two-sided market.

In the second part of this article, I build on Bollinger and Barron’s comparison between the Press and Broadcasting to reject a determinist approach to media regulation based on the exceptionalism of each medium. I argue that platform regulation has a lot to learn from the broadcast system of regulation, not only American but also European. This story is particularly important, the article argues, because unlike broadcasters, who broadcast only in their own countries and are consequently regulated by their country’s law, the very structure of digital media allows any citizen of any country (except the countries in which they are forbidden) to use them. This means that the owners of digital platforms are subject not only to their domestic law, but also to the domestic law of their users’ countries. Consequently, the American culture of the First Amendment is not the only one to take into account when shaping the policy regulating platforms.

The opposition between the American model and the European model is, in that regard, striking. In Europe, the Courts created a fiction, assuming that freedom of speech of the people would be respected if citizens had access to pluralist and diverse information and programming. This required substantial State regulation of private broadcasters in order to organize the debate in the public sphere, including a publicly funded broadcaster to provide content independent from private owners and advertisers. In the US, after the end of the Fairness doctrine, the restrictions on broadcasters are very light, with regard to the rationality, accuracy and pluralism of the news transmitted but also with regard to the diversity and quality of programming.

In the last part of the article, I build on the normative solutions adopted for the broadcasting system in Europe and in the US to propose some normative solutions for platform regulation, including regulation of algorithms to ensure that platforms’ users have access to a plurality of tendencies, or the adoption of co-regulation between States and platforms.

The Regulation of AI and Robotics in Medicine from a European and Comparative law perspective

Giorgia Guerra

The talk aims at discussing key and critical tort law issues that have arisen from artificial intelligence and robotic medical applications. The most recent European regulatory trajectories – ultimately traced by the Proposal for the Artificial Intelligence Act of 21 April 2021 – will be taken into account in comparative perspective with the instructive experience of US litigation on robotic surgery, which was a forerunner on account of the cutting-edge and accelerated development of the field.

The recognition and analysis of the criticalities about products’ malfunctioning as well as the focus on other producers’ duties will offer food for thought on the suitability of the legal rules planned by the European Authorities on the ground of the empirical features of the new step of technological progress in the field in question.

Attribute-based digital signatures and the eIDAS Regulation

Yong Yong Hu & Fabian van den Broek

The eIDAS Regulation provides the European legal framework for, among other things, electronic signatures. This framework distinguishes three types of electronic signatures: the ordinary, advanced and qualified electronic signatures. Such signatures are connectable to the identity of the signatory. This connection, at least for qualified electronic signatures, is made using certificates. However, such certificate-based electronic signatures (‘CBS’) are quite inflexible and do not support non-identifying attribute-based signatures (‘ABS’). ABS is a form of identity management where the electronic signature is not necessarily connectable to an identity. The use of such ABS is more privacy-friendly and can contribute to the principle of data minimisation, because the signatory decides which information he or she shares with a relying party. This contribution examines the legal aspects of ABS and analyses to what extent such (non-identifying) ABS fit into the system of the eIDAS Regulation.

The gamification of the sale of financial products and the protection of consumers in the European market

Nadia Coggiola

An increasing number of financial services companies are now offering their online services to consumers in the European market, at competitive prices. But this is not good news.

In fact, those same financial services companies often exploit the weaknesses of consumers, with possible severe economic consequences.

I am especially referring to those internet platforms engineered to enable investments on financial markets mostly using mobiles and tablets, which advertise online trading describing it as a fun, smart, easy activity, which does not require any financial literacy and furthermore can be shared with friends and acquaintances using already existing social platforms or the same investment platform.

Although these new investment platforms offer the general public of private investors/consumers the same instruments generally offered by traditional online bank or investment services, they transform the act of investing, due to the features of the application interface, from an operation supposedly based on conscious deliberation into an act mostly driven by the search for entertainment and social relationships.

That is to say, we are witnessing the “gamification” and “socialization” of investment activities, with the related data-driven influence of these investment platforms on investors, which may well entail serious social and economic consequences. This evolution was certainly not foreseeable by the European legislator when the rules on online investment services for consumers were written.

The aim of my paper is therefore to investigate the impact of the use of the new generation of investment tools on consumers’ behaviour, to ascertain what are the shortcomings of the application of the existing European legislation on the distance marketing of financial products in these cases and to predict the possible future European legislative features in the sector.

The existing provisions of the Directive 2002/65/EC of the European Parliament and of the Council of 23 September 2002 concerning the distance marketing of consumer financial services and its national implementation rules, are indeed certainly no longer able to provide the needed protection to consumers when they invest using internet platforms that actively interact with them, pushing them to invest, giving them unrequested feedback or feedback not directly concerning their investments and in the end transforming the investment activity into a social and recreational one.

That Directive was in fact clearly written bearing in mind the state of technology at the time it was enacted, two decades ago. Therefore, it did not take into consideration the negative influences that technological evolution, above all the use of mobile phones in place of more traditional tools, could have on consumers when acting as investors, and could not foresee the future “gamification” and “socialization” of investment activities.

The European Union is perfectly aware of the possible negative effects of the use of the new investing tools on consumers, and is actively working toward a feasible solution, as can be inferred from the Final Report of January 2020 of Evaluation of Directive 2002/65/EC on Distance Marketing of Consumer Financial Services.

In that Report it is clearly underlined that the way the information is ‘framed’ influences the capacity of consumers to read and understand it, especially in the context of very complex financial services/products. Furthermore, it is pointed out that, because of the widespread adoption of digital devices for the search or purchase of financial products or services (one in three consumers), existing information disclosure requirements should be adapted to these emerging communication channels, so helping consumers to read and understand it.

How and if the European Union will be able to transform this new knowledge into rules efficiently able to protect consumers’ interests remains to be seen.

Shadow banning, downranking and other secret sanctions: on the opacity of non-takedown content moderation remedies

Paddy Leerssen

This paper examines different remedies in content moderation from the perspective of transparency. Platforms are increasingly supplementing their takedown measures, which disable access to content, with alternatives such as demonetization, downranking, delisting and disclaimers. These are considered less restrictive and therefore better suited to the governance of non-unlawful and so-called ‘borderline’ content such as disinformation. However, this paper argues, the acceleration of non-takedown measures comes at underappreciated costs to transparency in content moderation. To this end, this paper analyzes different moderation remedies from both sociotechnical and legal perspectives. Sociotechnically, the impact of takedown on its users is relatively self-evident, whereas other measures such as downranking can be applied covertly in what amounts to opaque ‘shadow banning’. Legally, despite this, lawmaking on content moderation transparency in such instruments as the EU Digital Services Act (DSA) remains preoccupied with takedown and fails to shed light on its subtler alternatives.

To address this lacuna, this paper considers arguments for and against expanding uploader notice rights such as the DSA’s beyond takedown to cover other forms of content moderation as well. In addition, it explores how non-takedown measures can be documented in entirely new ways, surpassing current frameworks designed primarily with takedown in mind: content-specific reporting and content-adjacent disclaimers. These proposals capitalize on the fact that content affected by non-takedown measures remains accessible, in contrast to taken-down content, such that their impact can be documented in more meaningful detail. Non-takedown content moderation is currently far more opaque than takedown, but it has the potential to be far more transparent.

Using Terms and Conditions to apply Fundamental Rights to Content Moderation: Is Article 12 DSA a Paper Tiger?

João Pedro Quintais, Naomi Appelman & Ronan Fahy

Large online platforms provide an unprecedented means for exercising freedom of expression online, and wield enormous power over public participation in the online democratic space. However, it is increasingly clear that their systems, where (automated) content moderation decisions are taken based on a platform’s terms and conditions (T&Cs), are fundamentally broken. Content moderation systems have been said to undermine freedom of expression, especially where important public interest speech ends up suppressed, such as speech by minority and marginalised groups. Indeed, these content moderation systems have been criticised for their overly vague rules of operation, inconsistent enforcement, and an overdependence on automation. Therefore, in order to better protect freedom of expression online, international human rights bodies and civil society organisations have argued that platforms “should incorporate directly” principles of fundamental rights law into their T&Cs.

Under EU law, platforms presently have no obligation to incorporate fundamental rights into their T&Cs. However, an important provision in the Digital Services Act (DSA) proposal may change this. Crucially, Article 12 DSA lays down new rules on how platforms can enforce their T&Cs, including that platforms must have “due regard” to the “fundamental rights” of users under the EU Charter of Fundamental Rights. In this paper, we critically examine Article 12 DSA, including proposed amendments. We ask whether it requires platforms to apply EU fundamental rights law and to what extent this may curb the power of Big Tech over online speech. We conclude that, as it stands and until courts intervene, the provision may be too vague and ambiguous to effectively support the application of fundamental rights. Further, although a step in the right direction, we question whether this type of application of fundamental rights in T&Cs will solve the most pressing issues at hand and rein in the power of platforms.

This paper is based on an eponymous chapter in: Heiko Richter, Marlene Straub, Erik Tuchtfeld “To Break Up or Regulate Big Tech? Avenues to Constrain Private Power in the DSA/DMA Package” (October 11, 2021). Max Planck Institute for Innovation & Competition Research Paper No. 21-25, Available at SSRN: https://ssrn.com/abstract=3932809 or http://dx.doi.org/10.2139/ssrn.3932809.

Why is the Netherlands a late adopter of legal technologies?

Linnet Taylor

The Dutch legal system has not been an early adopter of legal AI. Aside from some high-profile experiments such as the automation of environmental permissions decisions, the court system, in particular, has a strong predilection for paper. How did this come about, given that the state of the art is advancing in higher-income countries across the EU and the world? This paper offers an account of why an innovation-oriented country such as the Netherlands has been slow to adopt legal AI, and asks what might change that. The questions we aim to answer are the following: What are the types of legal technology that might be adopted given the state of the art in similar countries? What are the infrastructures that would have to be in place for legal AI to be adopted? And what does this tell us about the future of legal AI in the Dutch legal system?

We will offer a review of the current landscape of legal AI in the Netherlands from an infrastructures perspective (both technical and material infrastructures, and those relating to organisational and human capacity). We will also sketch the institutional and political factors that influence the will to begin on this path. We will use this review to draw conclusions as to the conditions under which legal AI in NL might take off, and to think through what this provides in terms of heuristics for understanding the development of legal AI in different country environments.

Machines that Make and Keep Promises – Lessons for Contract Automation from Algorithmic Trading on Financial Markets

Maria Jose Schmidt-Kessen, Helen Eenmaa & Maya Mitre

An important part of the criticism raised against the adoption of advanced contract automation relates to the inflexibility of automated contracts. Drawing on rational choice theory, we explain why inflexibility, when seen as a constraint, can ultimately not only enhance welfare but also enable cooperation on algorithmic markets. This illuminates the need to address the inflexibility of contracting algorithms in a nuanced manner, distinguishing between inflexibility as a potentially beneficial constraint on the level of transactions, and inflexibility as a set of systemic risks and changes arising in markets employing inflexible contracting algorithms. Using algorithmic trading in financial markets as an example, we show how the automation of finance has brought about institutional changes in the form of new regulation to hedge against systemic risks from inflexibility. Analysing the findings through the lens of new institutional economics, we explain how widespread adoption of contract automation can put pressure on institutions to change. We conclude with possible lessons that algorithmic finance can teach to markets deploying algorithmic contracting.

De digitale algemene vergadering binnen Nederlandse beursvennootschappen: een volwaardig alternatief?

Ismet Öncü

***While the article is written in Dutch, the findings will be presented in English***

Many of us have been members of an association at one time or another—think of a student association, a hockey club, or a political party. As a member, you have the right to attend the annual general meeting. The same holds true for shareholders in a company: at the general meeting, the shareholders discuss the past fiscal year with the company directors and supervisors. After this discussion, a vote takes place on a number of agenda items. Traditionally, this meeting takes place in physical form. However, the COVID-19 crisis has raised questions regarding the possibility (and the need) to digitize the annual general meeting.

Digitalization – currently – is still in its infancy in Dutch corporate law. The legal framework for the digital participation of shareholders leaves many questions unanswered. What happens to the vote, if some shareholders are disconnected? Will the vote still be valid? Should shareholders always have the right to speak online during the general meeting, or is this too laborious if the group is large?

While these matters deserve a sound answer, the first – and thus most important – question must be asked: what are the preconditions for digital meetings of shareholders? The answer to this question is fairly simple: companies must embed this possibility in their articles of association. But has every company done so, and if so, in what way?

To demystify this matter, this paper examines the articles of association of all Dutch listed companies (95 in total). The findings are as follows:

1) Of the 95 companies studied, 71 have included the possibility of holding digital meetings in their articles of association.

2) In only 9 Dutch listed companies is it ensured that shareholders can participate in meetings in a comprehensive manner. This means that they can follow the meeting live, vote online and speak digitally. The other companies considered the facilitation of the latter right – in all likelihood – to be technically too difficult and laborious. In those meetings, shareholders are only allowed to follow the meeting passively (no live interaction from their side) and vote electronically.

The study illustrates that there is still much room for improvement, also given the fact that further digitization will be inevitable for the future corporate world.